News Story

UMD Team Wins Award for Designing a Navigation Sensor for Mini Robots

Published July 11, 2022

Researchers from the University of Maryland were recently recognized for their innovative work in developing a low-powered acoustic sensing system for miniature robots.

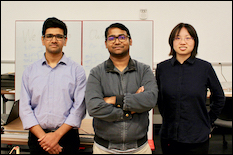

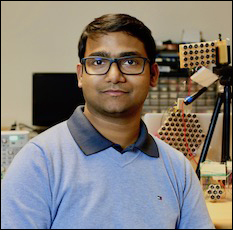

Computer science graduate students Yang Bai and Nakul Garg and their adviser, Assistant Professor Nirupam Roy, received a Best Paper Award at the 20th ACM International Conference on Mobile Systems, Applications and Services (MobiSys), held June 27–July 1 in Portland, Oregon.

Their paper, “SPiDR: Ultra-low-power Acoustic Spatial Sensing for Micro-robot Navigation,” details the development of an acoustic sensor that produces a cross-sectional map using one speaker and a set of microphones.

The researchers say their system consumes 10 times less power than systems currently in use, while generating a depth map that scores more than 80% on structural similarity with the real-world scene. The core idea of structure-assisted acoustic sensing opens a realm of new possibilities for low-power perception, obstacle detection and navigation.
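For readers unfamiliar with the metric, structural similarity (SSIM) compares two images through their means, variances and covariance rather than pixel by pixel. The snippet below is a minimal sketch of the single-window form of the standard SSIM formula. The function name `global_ssim`, the toy depth maps and the `data_range` default are illustrative assumptions, and the paper may compute its score differently (for example, averaged over sliding windows).

```python
import numpy as np


def global_ssim(a, b, data_range=1.0):
    """Single-window SSIM between two equally shaped arrays (illustrative)."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    if a.shape != b.shape:
        raise ValueError("inputs must have the same shape")

    # Standard stabilizing constants from the SSIM definition.
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2

    mu_a, mu_b = a.mean(), b.mean()
    var_a, var_b = a.var(), b.var()
    cov = ((a - mu_a) * (b - mu_b)).mean()

    numerator = (2 * mu_a * mu_b + c1) * (2 * cov + c2)
    denominator = (mu_a**2 + mu_b**2 + c1) * (var_a + var_b + c2)
    return numerator / denominator


# Toy example: a made-up "ground truth" depth map and a noisy estimate of it.
rng = np.random.default_rng(0)
truth = rng.random((32, 32))
noisy = np.clip(truth + 0.1 * rng.standard_normal(truth.shape), 0.0, 1.0)

print(f"SSIM(truth, truth) = {global_ssim(truth, truth):.3f}")
print(f"SSIM(truth, noisy) = {global_ssim(truth, noisy):.3f}")
```

A score of 1 means the two maps are structurally identical, and lower values indicate a poorer match.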

One example might be insect-sized robots, just a few inches long, which can work as first responders to search for survivors in disaster situations, perform mining or agricultural foraging, or even move heavy objects for organizational purposes.

These small robotic systems, when paired with autonomous navigation technology, can create new possibilities in a wide range of applications, including precision farming, disaster management and surveillance. Many challenges in locomotion, communication and navigation still need to be solved, however, before these tiny robots can be tested in real-world scenarios.

One of the key challenges is designing the navigation sensor, says Bai, a second-year doctoral student and one of the paper’s primary authors. While light detection and ranging (LiDAR) technology and radar have nearly solved the navigation sensing problem for self-driving vehicles, these existing techniques cannot be applied to tiny robots because of constraints like limited energy, computational power and manufacturing budget.

Bai says her work enables navigation for these low-power tiny robots because the sensor is just a few centimeters in size and has a low manufacturing cost.

“The key idea is to break away from scanning the scene angle by angle,” she explains. “We designed a microstructure to transmit the spatially coded signal. It sends unique patterns of a signal in each direction, so that the reflected signal also carries the information of the direction and distance.”

And while it is challenging to have enough spatial diversity of signal with a single omnidirectional source, the UMD team was able to leverage sound’s interaction with small structures to create a 3D-printed passive filter—called a stencil—which can project spatially coded signals on a region at a fine granularity.

The system receives a linear combination of the reflections from nearby objects and applies a novel power-aware depth-map reconstruction algorithm. The algorithm first estimates the approximate locations of the objects in the scene. Then, once the researchers highlight certain regions of interest, they repeatedly zoom in on those locations to increase the resolution.
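The idea of recovering a scene from a linear combination of coded reflections, first coarsely and then at finer resolution, can be illustrated with a toy numerical model. The sketch below is not the SPiDR algorithm. It assumes a hypothetical random code matrix `codes` (one column per scene cell, standing in for the direction-specific patterns the stencil produces), a one-dimensional scene with two reflectors, and a simple two-pass least-squares solve. All names and parameters are illustrative.

```python
import numpy as np


def coarse_to_fine(codes, received, block=8, keep=2):
    """Toy two-pass recovery: locate strong blocks, then refine inside them."""
    n_samples, n_cells = codes.shape
    if n_cells % block != 0:
        raise ValueError("n_cells must be divisible by block")
    n_blocks = n_cells // block

    # Coarse pass: treat each block of adjacent cells as a single unknown
    # whose signature is the sum of the codes of the cells it contains.
    coarse_codes = codes.reshape(n_samples, n_blocks, block).sum(axis=2)
    coarse, *_ = np.linalg.lstsq(coarse_codes, received, rcond=None)

    # Keep only the blocks with the strongest coarse response.
    top_blocks = np.sort(np.argsort(np.abs(coarse))[-keep:])
    cells = np.concatenate(
        [np.arange(b * block, (b + 1) * block) for b in top_blocks]
    )

    # Fine pass: solve at full resolution, but only inside the kept blocks.
    fine, *_ = np.linalg.lstsq(codes[:, cells], received, rcond=None)
    estimate = np.zeros(n_cells)
    estimate[cells] = fine
    return estimate


rng = np.random.default_rng(0)
n_samples, n_cells = 1024, 64

# Hypothetical spatial codes: each scene cell reflects a distinct pattern.
codes = rng.standard_normal((n_samples, n_cells))

# Toy scene with two reflectors of different strength.
scene = np.zeros(n_cells)
scene[5] = 1.0
scene[42] = 0.8

# The microphone observes the sum of all coded reflections plus slight noise.
received = codes @ scene + 0.01 * rng.standard_normal(n_samples)

estimate = coarse_to_fine(codes, received)
print("cells with strong reflections:", np.flatnonzero(np.abs(estimate) > 0.1))
```

In this toy setup the fine pass solves for 16 unknowns instead of 64, which hints at why zooming in only where objects appear can reduce computation.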

“This paper is a step toward a broader vision of reducing hardware complexity for sensing on the edge-IoT platforms,” says Roy, who also has an appointment in the University of Maryland Institute for Advanced Computer Studies. “It strives to relax strict hardware requirements in spatial sensing on resource-constrained devices by formulating it primarily as a computation and inference problem. This leverages the capabilities of advanced machine intelligence to bridge the limitations of the unsophisticated hardware toward achievable performance.”


—Story by Melissa Brachfeld